Copy Datastore from one Google Cloud project to another

Sometimes we need to copy Datastore data from one Google Cloud Platform project to another.

Requirements:

- gcloud SDK on the local machine

This process goes through 4 steps.

- Export Datastore from the origin project

- Give the target project's default service user the legacy storage reader role on the bucket

- Transfer the bucket data to the target project's bucket

- Import the Datastore export file

Step 1 Export Datastore

From February 2019, you will no longer be able to use Datastore Admin, as Google is ending support for it. You have to use Cloud Datastore import/export instead.

Cloud Datastore import/export writes the exported data to a Cloud Storage bucket that you specify. To see how to set up automated Datastore exports, refer to this post.
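As a minimal sketch of the export step, the script below wraps `gcloud datastore export` in a small function. The project ID and bucket name are hypothetical placeholders, not values from this post, so replace them with your own.

```shell
#!/bin/sh
# Hypothetical placeholders: replace with your own project ID and bucket.
ORIGIN_PROJECT="origin-project-id"
EXPORT_BUCKET="gs://origin-export-bucket"

# Export all Datastore entities of the origin project into the bucket.
export_datastore() {
  gcloud datastore export "$EXPORT_BUCKET" --project="$ORIGIN_PROJECT"
}

# Uncomment to start the export once the placeholders are filled in:
# export_datastore
```

The export creates a folder in the bucket that contains a file ending in `.overall_export_metadata`, which is the file the import step needs later.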

Step 2 Give the target project's default service user the legacy storage reader role on the bucket

Here we give the target project read access to the origin project's bucket.

For example, if you want to copy from project A to project B, give the legacy storage reader role on project A's source bucket to the default service user of project B.
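The same permission can probably be granted from the command line. The sketch below uses `gsutil iam ch`. It assumes that the "default service user" is project B's App Engine default service account (`PROJECT_ID@appspot.gserviceaccount.com`). The project ID and bucket name are hypothetical placeholders.

```shell
#!/bin/sh
# Hypothetical placeholders: replace with your own values.
TARGET_PROJECT="target-project-id"          # project B
SOURCE_BUCKET="gs://origin-export-bucket"   # bucket in project A

# Assumption: the "default service user" is project B's App Engine
# default service account.
TARGET_SA="serviceAccount:${TARGET_PROJECT}@appspot.gserviceaccount.com"

# Grant the Storage Legacy Bucket Reader role on the source bucket.
grant_bucket_read() {
  gsutil iam ch "${TARGET_SA}:legacyBucketReader" "$SOURCE_BUCKET"
}

# Uncomment to apply the change once the placeholders are filled in:
# grant_bucket_read
```

As far as I know, the legacy bucket reader role only allows listing the bucket contents. If reading the exported objects fails, an object-level role such as `objectViewer` may also be needed, so check the current Cloud Storage documentation.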

Figure: set the bucket permission to make it accessible by the target project's default service user

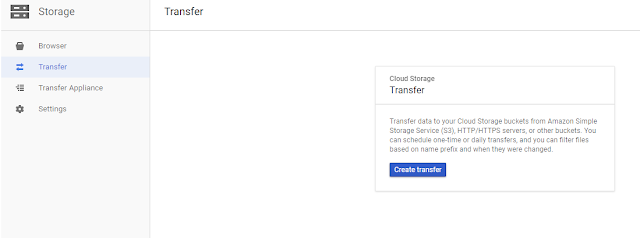

Step 3 Transfer bucket data to the target project's bucket

This step works more like a copy than a transfer. We have to specify where the data is copied from and where it is copied to.

So let's start transferring the data.

Figure: Cloud Storage transfer

You will see this page if you are transferring storage data for the first time.

Figure: select the source bucket

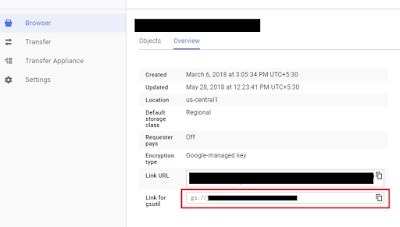

The source bucket has to be the one in the origin project. You can find its URL in the bucket information, as shown in the image below (note that bucket URLs start with gs://).

Figure: find the origin bucket URL

Then select the destination bucket in the target project.

Figure: select the destination bucket in the target project
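If you prefer the command line to the transfer page, a plain `gsutil rsync` copy is one possible alternative. This is not the Storage Transfer Service used above, only a sketch of a different way to copy the same files. The bucket names are hypothetical placeholders, and the account running the command needs read access to the source bucket and write access to the target bucket.

```shell
#!/bin/sh
# Hypothetical placeholders: replace with your own bucket URLs.
SOURCE_BUCKET="gs://origin-export-bucket"
TARGET_BUCKET="gs://target-import-bucket"

# Copy every object from the source bucket into the target bucket.
# -m runs the copy in parallel; -r recurses into the export folders.
copy_export() {
  gsutil -m rsync -r "$SOURCE_BUCKET" "$TARGET_BUCKET"
}

# Uncomment to start the copy once the placeholders are filled in:
# copy_export
```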

At this point, the Datastore backup has been copied from the origin project to the target project.

Now the last step remains which is importing data from cloud storage of target project to datastore of target project.

This step requires GCLOUD SDK on your local machine. I will recommend using latest SDK available at moment. As I have to update my current version.

Run this command in cmd

gcloud datastore import gs://[path-to-the-over_all_export_file].overall_export_metadata

Hope you find it useful.

Stay connected!😁

Now the last step remains which is importing data from cloud storage of target project to datastore of target project.

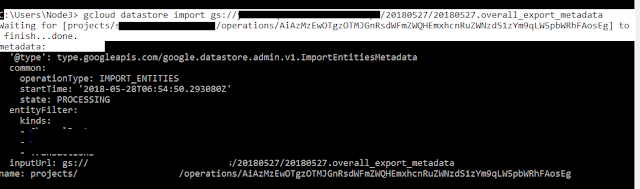

Step 4 import to datastore

This step requires GCLOUD SDK on your local machine. I will recommend using latest SDK available at moment. As I have to update my current version.

Run this command in cmd

|

| Run Gcloud import command |
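As a sketch of the import step, the script below passes the project explicitly so that the data lands in the target project even if your gcloud configuration still points at the origin project. The project ID is a hypothetical placeholder, and the metadata file URL is passed in as an argument because it depends on your own export.

```shell
#!/bin/sh
# Hypothetical placeholder: replace with your target project ID.
TARGET_PROJECT="target-project-id"

# Import an export into the target project's Datastore.
# $1 is the gs:// URL of the .overall_export_metadata file.
import_datastore() {
  gcloud datastore import "$1" --project="$TARGET_PROJECT"
}

# List import/export operations to check on progress.
list_operations() {
  gcloud datastore operations list --project="$TARGET_PROJECT"
}

# Uncomment and fill in the real path of your metadata file:
# import_datastore "gs://[path-to-the-over_all_export_file].overall_export_metadata"
```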

Hope you find it useful.

Stay connected!😁
